Bridging the Gap: Transitioning from Data Lakes to Data Fabrics | Part 1

In today's data-driven world, organizations are constantly seeking ways to manage and leverage vast amounts of data efficiently. Data Lakes and Data Fabrics are two prominent approaches that have emerged to address these needs. While both offer unique advantages, the shift from traditional Data Lakes to more sophisticated Data Fabrics marks a significant evolution in data management. This article, part one of a two-part series, delves into the key differences between these two technologies and explores the benefits of transitioning to Data Fabrics.

Understanding Data Lakes

Data Lakes are centralized repositories designed to store large volumes of raw data in its native format. This data can include structured, semi-structured, and unstructured data from various sources. The primary goal of a Data Lake is to provide a scalable and cost-effective solution for storing massive datasets.

Key Characteristics of Data Lakes

Data Storage

Data Lakes offer centralized storage for large volumes of data, accommodating diverse data types such as structured (e.g., relational databases), semi-structured (e.g., JSON, XML), and unstructured data (e.g., text, images, videos). This centralized approach allows organizations to consolidate data from multiple sources into a single repository.

Advantages:

- Scalability: Capable of handling petabytes of data.

- Flexibility: Store data in its raw format without the need for immediate processing.

Challenges:

- Complex Management: Managing a centralized repository can be challenging, especially as data volumes grow.

Schema

Data Lakes typically use a schema-on-read approach. This means that data is stored in its raw form without enforcing a predefined schema. The schema is applied when the data is read or queried, allowing for greater flexibility.

Advantages:

- Flexibility: Allows for the storage of varied data types without upfront schema design.

- Adaptability: Easier to accommodate changes in data structure over time.

Challenges:

- Query Complexity: Queries can become complex as the schema needs to be applied at read time.

- Data Quality: Ensuring data quality without a predefined schema can be difficult.
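To make schema-on-read concrete, here is a minimal Python sketch. It assumes raw order records landed in the lake as JSON lines with nothing validated at write time. The records and the `ORDER_SCHEMA` mapping are hypothetical. The schema is just a set of converters applied while reading. It also shows the data quality challenge above: a bad value is discovered at query time, not at ingestion.

```python
import json
from typing import Any, Callable

# Raw records as they might land in a lake: nothing was validated on write,
# so fields and value formats vary from record to record.
RAW_LINES = [
    '{"order_id": "1001", "amount": "19.99", "country": "DE"}',
    '{"order_id": "1002", "amount": "5.00"}',
    '{"order_id": "1003", "amount": "n/a", "country": "FR", "coupon": "SPRING"}',
]

# Hypothetical read-time schema: field name -> converter applied on read.
ORDER_SCHEMA: dict[str, Callable[[Any], Any]] = {
    "order_id": int,
    "amount": float,
    "country": str,
}


def read_with_schema(lines, schema):
    """Apply `schema` while reading; return (typed rows, rejected raw lines)."""
    rows, rejected = [], []
    for line in lines:
        record = json.loads(line)
        try:
            # Missing fields become None; fields not in the schema are ignored.
            row = {
                field: convert(record[field]) if field in record else None
                for field, convert in schema.items()
            }
        except (TypeError, ValueError):
            # Values that cannot be coerced are only discovered now, at read time.
            rejected.append(line)
            continue
        rows.append(row)
    return rows, rejected


rows, rejected = read_with_schema(RAW_LINES, ORDER_SCHEMA)
print(rows)
print(f"{len(rejected)} record(s) rejected")
```

The second record is accepted with `country` set to `None`, and the third is rejected because `"n/a"` cannot be read as a number. A different team could read the same raw lines with a different schema, which is the flexibility schema-on-read provides.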

Data Integration

Data Lakes offer basic data integration capabilities, often requiring manual intervention to ingest and process data from various sources. This can involve custom scripts or ETL (Extract, Transform, Load) processes.

Advantages:

- Versatility: Can ingest data from a wide range of sources.

- Cost-Effective: Initial setup costs can be lower compared to more automated solutions.

Challenges:

- Manual Effort: Integration processes can be labor-intensive and prone to errors.

- Inconsistency: Manual processes can lead to inconsistencies in data quality and structure.
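The manual integration effort described above often takes the form of a hand-written ETL script for each source. Below is a minimal Python sketch that uses only the standard library. The two CSV exports, the `COLUMN_MAPS` dictionary and the `raw/` folder layout are hypothetical. Each new source needs its own mapping entry. Any normalization rule, here lower-casing emails, lives in code that someone must keep consistent by hand.

```python
import csv
import io
import json
import tempfile
from pathlib import Path

# Two hypothetical source exports whose column names do not agree.
CRM_EXPORT = "CustomerID,Email\n1,ana@example.com\n2,ben@example.com\n"
BILLING_EXPORT = "cust_id,email_address,plan\n2,BEN@Example.com,pro\n3,cy@example.com,basic\n"

# Hand-maintained mappings: every new source needs another entry like these.
COLUMN_MAPS = {
    "crm": {"CustomerID": "customer_id", "Email": "email"},
    "billing": {"cust_id": "customer_id", "email_address": "email"},
}


def extract(csv_text: str) -> list[dict]:
    """Parse a CSV export into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows: list[dict], column_map: dict, source: str) -> list[dict]:
    """Rename mapped columns, drop the rest, and normalize emails."""
    records = []
    for row in rows:
        record = {new: row[old] for old, new in column_map.items()}
        record["email"] = record["email"].strip().lower()
        record["_source"] = source  # keep lineage so bad rows can be traced
        records.append(record)
    return records


def load(records: list[dict], lake_dir: str, source: str) -> Path:
    """Write one JSON Lines file per source into the lake's raw zone."""
    path = Path(lake_dir) / "raw" / f"{source}.jsonl"
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")
    return path


with tempfile.TemporaryDirectory() as lake:
    for source, text in [("crm", CRM_EXPORT), ("billing", BILLING_EXPORT)]:
        landed = load(transform(extract(text), COLUMN_MAPS[source], source), lake, source)
        print(landed.name, len(landed.read_text(encoding="utf-8").splitlines()))
```

Running it prints `crm.jsonl 2` and `billing.jsonl 2`. The `plan` column from billing is silently dropped because nobody added it to the mapping. This is the kind of inconsistency that creeps in when integration is maintained by hand.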

Governance

Governance in Data Lakes can be challenging without proper policies and frameworks. Effective data governance involves managing data quality, security, and compliance.

Advantages:

- Control: With proper governance, organizations can maintain control over their data assets.

- Security: Implementing robust security measures can protect sensitive data.

Challenges:

- Complexity: Governance can be complex and resource-intensive.

- Risk: Poor governance can lead to data quality issues and compliance risks.

Use Cases

Data Lakes are ideal for batch processing, data science, and machine learning applications. They provide a repository for storing large datasets that can be analyzed and processed using various tools and frameworks.

Advantages:

- Data Science: Supports advanced analytics and machine learning workflows.

- Research and Development: Facilitates experimentation with large datasets.

Challenges:

- Performance: Batch processing can be time-consuming and resource-intensive.

Performance

Performance in Data Lakes can be variable, depending on the size and type of data being processed. Factors such as data volume, query complexity, and hardware resources can impact performance.

Advantages:

- Scalability: Capable of handling large-scale data processing tasks.

- Flexibility: Can support a wide range of analytical workloads.

Challenges:

- Inconsistency: Performance can vary, affecting the reliability of insights.

- Optimization: Requires continuous optimization to maintain performance levels.

Cost

Data Lakes provide cost-effective storage solutions, particularly for archival and historical data. They leverage scalable storage technologies that can grow with organizational needs.

Advantages:

- Economical: Lower storage costs compared to traditional data warehouses.

- Scalable: Pay-as-you-go models for storage can align with budget constraints.

Challenges:

- Hidden Costs: Costs associated with data management, integration, and governance can add up over time.

Advantages of Data Lakes

Let’s look at some of the advantages that Data Lakes offer businesses:

Scalability

Data Lakes can effortlessly scale to accommodate massive amounts of data, growing from terabytes to petabytes without the need for complex restructuring or expensive upgrades. This scalability is crucial for organizations that generate large volumes of data or anticipate rapid data growth.

Flexibility

Data Lakes offer unmatched flexibility in terms of the types of data they can store. They can handle structured data (e.g., relational databases), semi-structured data (e.g., JSON, XML), and unstructured data (e.g., text, images, videos). This flexibility allows organizations to consolidate diverse data sources into a single repository.

Cost-Effective Storage

Data Lakes provide a cost-effective solution for storing large volumes of data. They typically run on low-cost storage, such as cloud object storage, which offers economical options for both short-term and long-term data storage.

Data Agility

With Data Lakes, data can be ingested and stored without the need for immediate structuring or transformation. This schema-on-read approach allows organizations to store data as-is and apply the necessary structure and transformations only when the data is read or analyzed.

Advanced Analytics and Machine Learning

Data Lakes are particularly well-suited for advanced analytics and machine learning applications. By storing raw data in its native format, Data Lakes provide data scientists and analysts with the flexibility to explore and experiment with large datasets using various tools and frameworks.

Simplified Data Management

Centralizing data storage in a Data Lake simplifies data management by consolidating data from multiple sources into a single repository. This centralization reduces data silos, improves data accessibility, and streamlines data governance processes.

What are Data Fabrics?

Data Fabrics represent a more advanced approach to data management, offering a unified architecture that connects disparate data sources in a seamless, automated, and real-time manner. Unlike Data Lakes, Data Fabrics focus on integrating data from various environments, whether on-premises, cloud, or hybrid.

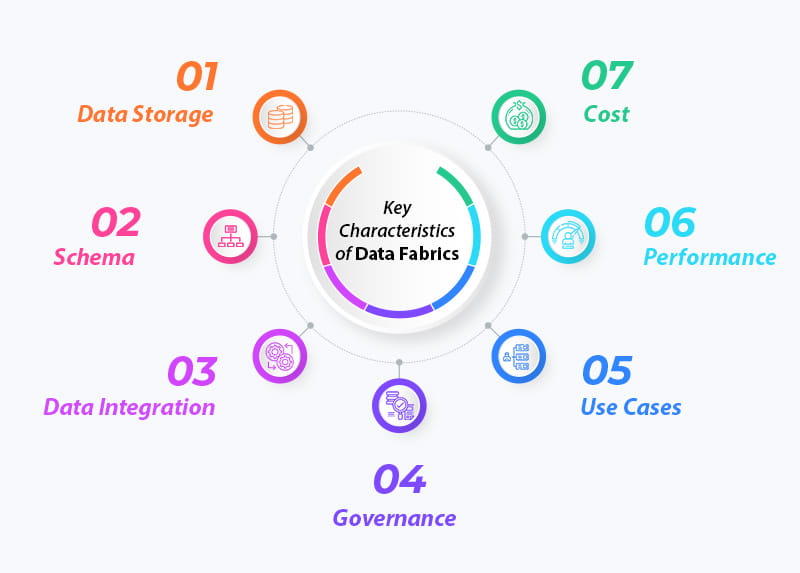

Key Characteristics of Data Fabrics

Data Storage

Rather than relying on a single central store, Data Fabrics provide a distributed layer that interconnects data sources where they already live, both on-premises and cloud-based. This interconnected approach ensures that data from different environments can be seamlessly accessed and integrated, providing a comprehensive view of organizational data.

Benefits:

- Seamless Access: Users can access data from multiple sources without moving it to a central location.

- Flexibility: Integrates with existing infrastructure, reducing the need for data migration.
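The "access without moving it" idea can be approximated with a virtual catalog. The catalog maps logical dataset names to functions that read each source in place, and only when a query runs. The Python sketch below is a toy built on stated assumptions. An in-memory SQLite database stands in for an on-premises system and a plain function stands in for a cloud API. `CATALOG` and `revenue_by_customer` are hypothetical names. Production platforms typically add query pushdown, caching and access control on top, none of which is modeled here.

```python
import sqlite3

# Stand-in for an on-premises operational database (in-memory for the demo).
onprem = sqlite3.connect(":memory:")
onprem.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
onprem.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ana"), (2, "Ben")])


def fetch_onprem_customers():
    """Read customers directly from the source database at query time."""
    rows = onprem.execute("SELECT id, name FROM customers ORDER BY id")
    return [{"id": cid, "name": name} for cid, name in rows]


def fetch_cloud_orders():
    """Stand-in for a cloud/SaaS API call returning JSON-like records."""
    return [
        {"customer_id": 1, "total": 40.0},
        {"customer_id": 1, "total": 10.0},
        {"customer_id": 2, "total": 25.0},
    ]


# Hypothetical virtual catalog: logical dataset name -> function that reads it
# in place. Nothing is copied into a central store ahead of time.
CATALOG = {"customers": fetch_onprem_customers, "orders": fetch_cloud_orders}


def revenue_by_customer(catalog):
    """Join the two sources on the fly and sum order totals per customer."""
    names = {c["id"]: c["name"] for c in catalog["customers"]()}
    totals = {}
    for order in catalog["orders"]():
        name = names[order["customer_id"]]
        totals[name] = totals.get(name, 0.0) + order["total"]
    return totals


print(revenue_by_customer(CATALOG))
```

The query code only knows logical names such as `"customers"` and `"orders"`. A source could be re-platformed by swapping the function behind a catalog entry without touching the analysis.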

Schema

Similar to Data Lakes, Data Fabrics often use a schema-on-read approach, but with enhanced flexibility. This allows for the dynamic application of schemas as data is accessed, supporting diverse data formats and structures.

Benefits:

- Dynamic Structuring: Apply schemas on the fly based on analytical needs.

- Support for Diversity: Handle various data types and formats seamlessly.

Data Integration

Data Fabrics excel at advanced, automated, real-time data integration. They use intelligent data orchestration to automate the process of integrating data from different sources, ensuring that data stays up to date and consistent across the organization.

Benefits:

- Real-Time Integration: Automatically integrate data in real time, providing timely insights.

- Consistency: Maintain data consistency across various sources and environments.
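Real-time integration is often driven by change events emitted by each source, for example through change data capture, rather than by scheduled batch jobs. This Python sketch shows only the core idea, under simplifying assumptions. An in-process `queue.Queue` stands in for a streaming platform, and `ChangeEvent` and `apply_changes` are hypothetical names. Each event is applied to a unified view as it arrives, so the view reflects the latest state reported by every source.

```python
from dataclasses import dataclass, field
from queue import Queue


@dataclass
class ChangeEvent:
    source: str  # system that emitted the change, e.g. "crm"
    op: str      # "upsert" or "delete"
    key: str     # business key shared across sources, e.g. a customer id
    data: dict = field(default_factory=dict)


def apply_changes(events: Queue, view: dict) -> None:
    """Drain pending change events into the unified view, in arrival order."""
    while not events.empty():
        event = events.get()
        if event.op == "upsert":
            # Merge attributes so each source contributes the fields it owns.
            view.setdefault(event.key, {}).update(event.data)
        elif event.op == "delete":
            view.pop(event.key, None)
        events.task_done()


events: Queue = Queue()
events.put(ChangeEvent("crm", "upsert", "c1", {"email": "ana@example.com"}))
events.put(ChangeEvent("billing", "upsert", "c1", {"plan": "pro"}))
events.put(ChangeEvent("crm", "upsert", "c2", {"email": "ben@example.com"}))
events.put(ChangeEvent("crm", "delete", "c2"))

customer_view: dict = {}
apply_changes(events, customer_view)
print(customer_view)
```

After the four events, `c1` carries fields from both CRM and billing, and `c2` has been removed. A real deployment would run the consumer continuously. It would also have to handle ordering across sources and conflicting updates, which this sketch ignores.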

Governance

Data Fabrics come with built-in, automated governance features. These include data quality management, security, and compliance monitoring, ensuring that all data within the fabric adheres to organizational policies and regulatory requirements.

Benefits:

- Automated Compliance: Ensure data meets regulatory standards without manual intervention.

- Data Quality: Maintain high data quality with automated checks and balances.
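Automated governance generally means that policies are attached to metadata, such as a tag in a data catalog, and enforced whenever data is served, instead of being re-implemented in every pipeline. The Python sketch below illustrates that pattern under explicit assumptions. The `COLUMN_TAGS`, `PII_READERS` and `REQUIRED_COLUMNS` definitions, the role names, the `MASKING_KEY` and the 12-character HMAC pseudonym are all hypothetical choices, not a specific product's behavior.

```python
import hashlib
import hmac

# Hypothetical catalog metadata: column name -> governance tags.
COLUMN_TAGS = {"customer_id": set(), "email": {"pii"}, "country": set()}

# Hypothetical policies: who may read PII in clear text, and which
# columns must be populated for a row to pass the quality check.
PII_READERS = {"compliance_officer"}
REQUIRED_COLUMNS = {"customer_id", "country"}

# Placeholder secret; in practice this would come from a secrets manager.
MASKING_KEY = b"demo-key-rotate-me"


def pseudonymize(value: str) -> str:
    """Keyed, deterministic pseudonym, so masked values can still be joined."""
    return hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:12]


def apply_policies(rows: list[dict], role: str) -> tuple[list[dict], list[tuple]]:
    """Return (rows as visible to `role`, quality issues as (row index, missing columns))."""
    can_see_pii = role in PII_READERS
    visible, issues = [], []
    for i, row in enumerate(rows):
        missing = sorted(c for c in REQUIRED_COLUMNS if row.get(c) in (None, ""))
        if missing:
            issues.append((i, missing))
        served = {}
        for col, val in row.items():
            is_pii = "pii" in COLUMN_TAGS.get(col, set())
            mask = is_pii and not can_see_pii and val is not None
            served[col] = pseudonymize(val) if mask else val
        visible.append(served)
    return visible, issues


rows = [
    {"customer_id": "1", "email": "ana@example.com", "country": "DE"},
    {"customer_id": "2", "email": None, "country": ""},
]
analyst_rows, issues = apply_policies(rows, role="analyst")
print(analyst_rows[0]["email"])  # a 12-character pseudonym, not the address
print(issues)
```

A keyed hash (HMAC) is used instead of a plain hash because unsalted hashes of predictable values such as email addresses can be reversed by guessing. Because the rules hang off the catalog tags, tagging a new column as `pii` is enough to mask it for every non-privileged reader.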

Use Cases

Data Fabrics are designed for real-time analytics and cross-environment data integration. They support use cases that require immediate insights from data spread across different systems, such as real-time customer analytics, operational intelligence, and agile business processes.

Benefits:

- Real-Time Analytics: Enable instant insights and decision-making.

- Cross-Environment Integration: Seamlessly integrate data from various environments.

Performance

Data Fabrics deliver optimized, consistent performance by leveraging advanced data processing techniques and distributed computing. This ensures that data queries and analytics run efficiently, regardless of data volume or complexity.

Benefits:

- Consistent Performance: Reliable performance for all data workloads.

- Efficiency: Optimize resource utilization and query processing.

Cost

Data Fabrics are cost-efficient across different environments, balancing on-premises and cloud resources to optimize costs. They enable organizations to leverage existing investments while taking advantage of the scalability and flexibility of cloud services.

Benefits:

- Cost Optimization: Efficiently manage costs across diverse data environments.

- Scalable Solutions: Scale resources up or down based on demand, controlling expenditure.

Advantages of Data Fabrics

Real-Time Data Integration

Data Fabrics automate and streamline data integration processes, enabling real-time access and insights. This capability is crucial for organizations that require immediate data availability for decision-making and operational efficiency.

Benefits:

- Immediate Insights: Ensures the latest data is always available for analysis.

- Operational Efficiency: Reduces manual effort and minimizes errors.

- Customer Responsiveness: Businesses can respond to customer needs more quickly with real-time data.

Enhanced Governance

Data Fabrics come with built-in, automated governance features that ensure data quality, security, and compliance. This includes data cataloging, metadata management, and policy enforcement.

Benefits:

- Data Quality: Automated processes ensure data remains accurate and reliable.

- Security and Compliance: Built-in measures protect sensitive data and ensure regulatory adherence.

- Policy Enforcement: Consistent application of governance policies across all data sources.

Optimized Performance

Data Fabrics deliver optimized performance by leveraging advanced data processing techniques and distributed computing. This ensures efficient data queries and analytics, regardless of data volume or complexity.

Benefits:

- Reliable Performance: Consistent performance for all data workloads.

- Efficiency: Faster data retrieval and analysis.

- Scalability: Handles increasing data volumes without performance degradation.

Cost Efficiency

Data Fabrics manage costs efficiently across different environments by optimizing the use of on-premises and cloud resources. They enable organizations to leverage existing investments while benefiting from cloud scalability.

Benefits:

- Cost Optimization: Balances on-premises and cloud resources.

- Scalable Solutions: Scale resources based on demand.

- Investment Protection: Utilize existing infrastructure investments.

Why Transition from Data Lakes to Data Fabrics?

Addressing Limitations of Data Lakes

While Data Lakes offer significant benefits, they also have limitations that can hinder organizational efficiency and effectiveness. Challenges such as complex data integration, inconsistent governance, and variable performance can create bottlenecks and reduce the overall value derived from data. Transitioning to Data Fabrics helps address these limitations by providing a more robust and efficient data management solution.

Enhancing Data Integration

Data integration is a critical aspect of effective data management. Data Lakes often require manual intervention to integrate and process data from various sources, which can be time-consuming and error-prone. Data Fabrics automate this process, ensuring seamless and real-time data integration across different environments. This automation reduces manual effort, minimizes errors, and provides immediate access to the latest data, enhancing decision-making and operational efficiency.

Improving Data Governance

Effective data governance is essential for maintaining data quality, security, and compliance. Data Lakes can struggle with governance due to the lack of built-in governance features and the challenges associated with managing large volumes of diverse data. Data Fabrics come with automated governance features, including data cataloging, metadata management, and policy enforcement, ensuring consistent data quality and compliance across all data sources.

Optimizing Performance

Performance variability is a common issue with Data Lakes, especially when dealing with large datasets and complex queries. Data Fabrics optimize performance by leveraging advanced data processing techniques and distributed computing. This ensures consistent and efficient data queries and analytics, regardless of data volume or complexity, providing a more reliable and faster data processing solution.

Achieving Cost Efficiency

Managing costs effectively is crucial for any organization. Data Lakes offer cost-effective storage solutions, but as data volumes grow, the costs associated with data management, integration, and governance can add up. Data Fabrics provide a more cost-efficient solution by optimizing the use of on-premises and cloud resources. They enable organizations to leverage existing investments while benefiting from the scalability and flexibility of cloud services, leading to better cost management and savings.

Enabling Real-Time Analytics

In today's fast-paced business environment, real-time analytics are essential for gaining timely insights and making informed decisions. Data Lakes primarily support batch processing, which can delay the availability of insights. Data Fabrics enable real-time data integration and analytics, providing immediate access to actionable insights and allowing organizations to respond quickly to market changes and customer needs.

Supporting Cross-Environment Integration

Modern data environments are often hybrid, with data distributed across on-premises, cloud, and edge locations. Data Lakes are primarily centralized, which can limit their ability to integrate and manage data from multiple environments effectively. Data Fabrics are designed to support cross-environment integration, providing a unified view of data from diverse sources and enabling seamless data management across different environments.

Conclusion

Transitioning from Data Lakes to Data Fabrics is driven by the need to overcome the limitations of traditional Data Lakes and achieve more efficient, automated, and real-time data management. By enhancing data integration, improving governance, optimizing performance, achieving cost efficiency, enabling real-time analytics, and supporting cross-environment integration, Data Fabrics offer a comprehensive solution for modern data management challenges. As you weigh the benefits of Data Lakes and Data Fabrics, connect with our experts to identify which approach is better suited to your business.

Organizations that embrace Data Fabrics can unlock the full potential of their data, driving more informed decision-making, improving operational efficiency, and achieving greater business agility. In the second part of this series, we will explore how businesses can move their data from Data Lakes to Data Fabrics, and how Espire can help ensure a smooth, hassle-free transition.